Learning the max pressure control for urban traffic networks considering the phase switching loss

Abstract

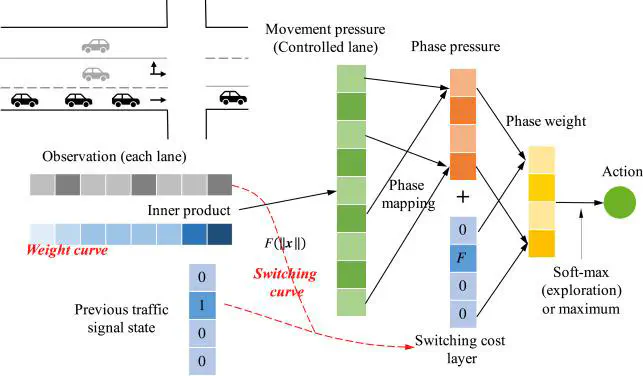

Previous studies have shown that max pressure control is a throughput-optimal policy that stabilizes a store-and-forward traffic network whenever the demand is within the network capacity. However, most existing studies on max pressure control do not consider the capacity loss associated with phase switching, which will undermine the stability of the network. This work proposes a novel framework that uses reinforcement learning to optimize a max pressure controller that accounts for the phase switching loss. We first extend max pressure control by introducing a switching curve and prove that the resulting control method is throughput-optimal in a store-and-forward network. We then extend this theoretical control policy with a distributed approximation and a position-weighted pressure, so that policy-gradient reinforcement learning algorithms can be used to optimize the parameters of the policy network, including the switching curve and the weight curve. Simulation results show that the proposed control method greatly outperforms conventional max pressure control. By using reinforcement learning to optimize the max pressure controller, the proposed framework combines the strengths of data-driven methods and theoretical control models. It is also well suited to real-world implementation, because the control policy can be generated in a distributed fashion based on local observations.
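To make the idea of a pressure-based controller with a switching penalty concrete, the following is a minimal illustrative sketch, not the method developed in this paper. It assumes a simplified link-level pressure (saturation flow times the difference between upstream and downstream queue lengths, ignoring turning ratios) and replaces the switching curve with a hypothetical constant `threshold`: the controller keeps the current phase unless another phase exceeds its pressure by more than that threshold. All names (`phase_pressure`, `select_phase`, the link labels) are assumptions introduced for illustration.

```python
from typing import Dict, List, Optional, Tuple

# A movement is a pair (upstream link, downstream link). Hypothetical naming.
Movement = Tuple[str, str]


def phase_pressure(
    movements: List[Movement],
    queues: Dict[str, float],
    sat_flow: Dict[Movement, float],
) -> float:
    """Simplified pressure of a phase: sum over its movements of
    saturation flow times (upstream queue minus downstream queue)."""
    return sum(sat_flow[m] * (queues[m[0]] - queues[m[1]]) for m in movements)


def select_phase(
    phases: Dict[str, List[Movement]],
    queues: Dict[str, float],
    sat_flow: Dict[Movement, float],
    current: Optional[str] = None,
    threshold: float = 0.0,
) -> str:
    """Pick the max pressure phase, but keep the current phase unless the
    best phase beats it by more than `threshold` (an assumed constant that
    stands in for a switching curve)."""
    pressures = {p: phase_pressure(ms, queues, sat_flow) for p, ms in phases.items()}
    best = max(pressures, key=pressures.get)
    if current is not None and pressures[best] - pressures[current] <= threshold:
        return current
    return best


# Toy four-leg intersection with two phases (made-up numbers).
phases = {
    "NS": [("n_in", "s_out"), ("s_in", "n_out")],
    "EW": [("e_in", "w_out"), ("w_in", "e_out")],
}
queues = {
    "n_in": 8, "s_in": 6, "e_in": 10, "w_in": 7,
    "s_out": 2, "n_out": 1, "w_out": 3, "e_out": 0,
}
sat_flow = {m: 1.0 for ms in phases.values() for m in ms}

# Pressures here are NS = 11 and EW = 14.
no_penalty = select_phase(phases, queues, sat_flow, current="NS", threshold=0.0)
with_penalty = select_phase(phases, queues, sat_flow, current="NS", threshold=5.0)
print(no_penalty, with_penalty)
```

With a zero threshold the sketch reduces to conventional max pressure selection and switches to the higher-pressure phase; with a positive threshold it holds the current phase until the pressure gap justifies the switch, which is the intuition behind accounting for switching loss.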